As technology evolves, the way games are developed and played continues to change. These days, language models (LLMs) are not only conversation partners within games but also help players with gameplay. But have you ever wondered:

“Is this LLM actually good at playing games?”

To answer this question properly, the Research Department of KRAFTON’s Deep Learning Division stepped up. They developed a benchmark to evaluate how well an LLM-based agent can actually play a game—and how it makes decisions in the process. The benchmark is called Orak, a name inspired by the Korean word for “오락,” meaning “entertainment” or “arcade,” evoking a warm sense of nostalgia among Koreans. The name alone is already quite charming and intriguing, isn’t it?

Today, we’re sitting down with Dongmin Park from KRAFTON’s Data-centric Deep Learning Research Team, who led this project, to hear in detail about how Orak came to life.

(Photo 1) The Orak Benchmark Team

Q. Hello! First of all, congratulations on the launch of Orak—and thank you for all your hard work. Let’s start with the basics: what inspired the Orak benchmark project, and what problem were you trying to solve?

Recently, there’s been a surge in efforts to make LLMs play actual games or assist within in-game scenarios. Projects like PUBG Ally and Smart Zoi are prime examples. But the problem is, there’s still no objective standard for determining whether an LLM is actually good at playing games.

It’s not just about inference skills or conversational ability — we need to assess how well the model interprets complex game environments and makes decisions based on them. That’s what it takes to be a truly gaming-capable LLM. With that realization, the Orak project was launched internally at KRAFTON. We decided to build an evaluation framework ourselves that would address both the technical needs and practical considerations of such systems.

Q. By the way, the name is so cute. What’s the story behind ‘Orak’? I think I might have a guess...

Korean readers probably picked up on it right away — Orak is derived from the Korean word “오락”, which means “entertainment” or “arcade.” While it’s not a word we use often these days, for those who grew up during the arcade era, it might feel even more nostalgic and familiar than the word “game.”

We chose this name because it feels natural and easy to pronounce for English-speaking researchers too, while also intuitively capturing the essence of the project.

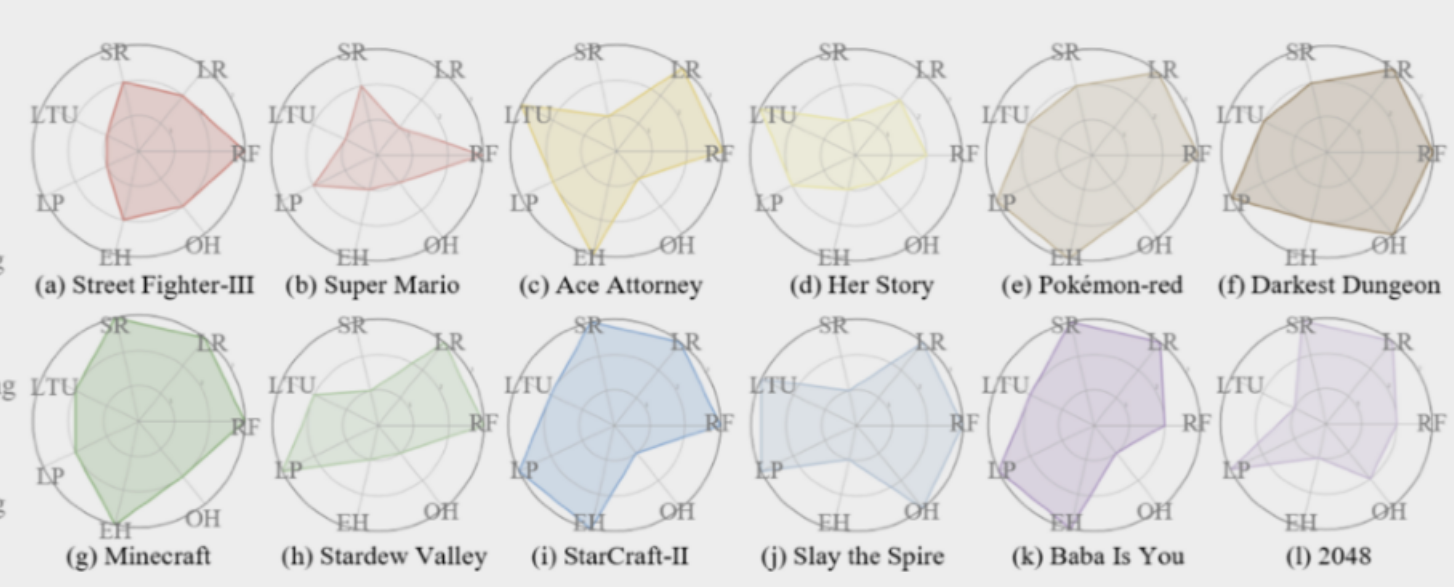

(Photo 2) The 12 games used in the Orak benchmark study

Q. Hopefully, the name Orak will go down in history as an iconic milestone! What was the experimental setup like for Orak? And what are the key design elements?

Orak is a benchmark built using 12 different video games. These games were selected not only for their symbolic and meaningful status within the industry, but also to reflect a diversity of genres. The goal wasn’t just to make these games playable by an LLM; each game environment was meticulously designed to assess how effectively the model can perceive a situation and make decisions within it.

We also designed the interface based on MCP (Model Context Protocol), which allows a wide range of LLMs to participate in the benchmark easily. The framework was built with both accessibility and scalability in mind. Thanks to this structure, researchers can obtain reliable and repeatable results.

Q. It sounds like a project with a tremendous amount of thought behind it. So how do you evaluate an agent's decision-making? I'm also curious about the technically important aspects.

It’s not just about whether the model “wins” the game — what really matters is how it plays. For each game environment, we defined a set of evaluation metrics to quantitatively measure how well the agent understands the situation and chooses appropriate actions.

The MCP structure I mentioned earlier forms the foundation for this kind of detailed evaluation. We also emphasized user-friendly design. We built a reusable interface so researchers could quickly and easily connect and test different models. This setup made it possible to evaluate and compare various models under a unified standard.

Q. Just listening to this already makes it sound like a refreshingly novel piece of research. What makes the Orak benchmark stand out from other evaluation systems? Please brag a little.

The biggest distinction is that the LLM has to actually play the game. It’s not just about testing knowledge — we evaluate the entire process, from interpreting the situation to making decisions and taking action. This allows for a much deeper assessment of the cognitive capabilities of an AI agent.

Another strength is that all the games used in the evaluation are well-known commercial titles. Their popularity makes it easier for researchers to interpret the results and adapt them to their own work. Most importantly, Orak evaluates the model’s strategic thinking based on the entire gameplay process and flow. In that sense, it takes a fundamentally different approach compared to traditional benchmarks.

We also provide fine-tuning datasets for LLMs, which we believe contributes to advancing research in gaming agents even further.

(Photo 3) Evaluation graphs for the 12 games

Q. This must have been a project that was impossible to complete alone. Were there any memorable moments of teamwork during the process?

Because collaboration was so essential to this project, the teamwork really stands out in my memory. Each team member was in charge of one specific game, and we all agreed on the overall MCP structure so that everything was implemented in a consistent way. We held regular weekly meetings to share issues and exchange feedback.

One especially memorable moment was when we worked on enabling multiple games to be played by the LLM using modding tools. It was repetitive and technically challenging, but everyone pitched in enthusiastically, and we ultimately made it work. It wouldn’t have been possible without the dedication of the team. Most of all, I think the way we respected each member’s expertise and coordinated accordingly really elevated the quality of the final result.

Q. Of course, anything new tends to come with its share of difficulties. Were there any unexpected problems or trial-and-error moments?

Toward the end of the project, we ran into a few unforeseen issues. At certain stages, it became difficult to proceed as originally planned, and we had to quickly come up with alternatives. One of those moments came right before we were about to submit our paper to NeurIPS — we found out we wouldn’t be able to work directly with the fine-tuned weights of the model.

Fortunately, we were able to quickly assess the situation and take action internally, and in the end, we were still able to secure enough data. Thanks to that, we managed to wrap up the experiments without major delays.

Q. Lastly, is there anything you’d like to say to the team members who worked with you on this project?

Thank you all so much for your incredible effort. Personally, I believe that what we’ve created with Orak is the most practical and well-rounded gaming LLM benchmark that exists today. I hope this benchmark becomes the starting point for much more research and development to come — and that we can all feel proud to have been there at the beginning of it. Every step of this journey was meaningful, and I hope this experience becomes a valuable asset for each of your careers moving forward.

Through this interview, we were able to gain a deeper appreciation for how the Orak project goes beyond a simple technical experiment — offering a meaningful standard for evaluating the actual decision-making and behavioral capabilities of language model-based agents. The fact that it’s designed to comprehensively assess an LLM’s situational awareness and reasoning skills across diverse game environments is particularly refreshing.

The results of the Orak project are now available in a published paper. If you’re interested in LLM research in the context of gaming, be sure to check out the links below for more details! 😊

😎 Orak Leaderboard

👨💻 Orak GitHub

✅ Full list of KRAFTON AI research papers